One of our customers recently reported that some parts of his site were not properly crawled by our scanner (Acunetix Web Vulnerability Scanner). Upon investigation, I found the cause of the problem.

When a specific page was visited, it set a cookie with a random name and a large value. This page had many parameters, so the crawler had to request it multiple times to test all the possible page variants. Our crawler saves all the cookies it receives in a “cookie jar” and re-sends them in subsequent requests to the same domain. Because every visit added another cookie, the cookie jar quickly filled up.
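To show how quickly this adds up, here is a minimal Python sketch of a very simplified cookie jar. The `CookieJar` class and the `visit_page` helper are hypothetical; this is not how Acunetix WVS is implemented. The sketch assumes each visit sets one new cookie with a random 20-character name and a 128-character hex value, which roughly matches the sizes in the header sample below.

```python
import secrets


class CookieJar:
    """Hypothetical, simplified cookie jar: one flat name -> value map per domain.

    Real jars also track path, expiry and Domain attributes; this sketch ignores them.
    """

    def __init__(self) -> None:
        self.cookies: dict[str, dict[str, str]] = {}

    def store(self, domain: str, name: str, value: str) -> None:
        # Save (or overwrite) a cookie received in a Set-Cookie response header.
        self.cookies.setdefault(domain, {})[name] = value

    def cookie_header(self, domain: str) -> str:
        # Re-send every stored cookie for this domain, joined with "; ".
        pairs = self.cookies.get(domain, {})
        return "; ".join(f"{name}={value}" for name, value in pairs.items())


def visit_page(jar: CookieJar, domain: str) -> None:
    # Assumed server behaviour: every visit sets a brand-new cookie.
    name = secrets.token_urlsafe(15)       # 15 random bytes -> 20 URL-safe characters
    value = secrets.token_hex(64).upper()  # 64 random bytes -> 128 hex characters
    jar.store(domain, name, value)


jar = CookieJar()
for target in (1, 10, 100):
    # Keep visiting until the jar holds `target` cookies for the domain.
    while len(jar.cookies.get("example.com", {})) < target:
        visit_page(jar, "example.com")
    header = jar.cookie_header("example.com")
    print(f"after {target} visit(s): Cookie header is {len(header)} bytes")
```

Under these assumptions each cookie adds 151 bytes (149 for `name=value` plus the `; ` separator), so the script reports 149, 1508 and 15098 bytes: after 100 visits the header is already about 15 KB.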

The crawler was sending all these cookies with each request:

Cookie: TZBOG1kGIX3dwbGchhKZ=F8D1050A65237C88CE946A94A78B8F78AA2DB6DC619AF7775A3FDD22BDDD7A3FF8D1050A65237C88CE946A94A78B8F78AA2DB6DC619AF7775A3FDD22BDDD7A3F;
lc5vBa8MiHv6vkY9T5Ff=0342699351D8B006450880185FEA6330861715F45545EB3859821AF6DDEE8F6A0342699351D8B006450880185FEA6330861715F45545EB3859821AF6DDEE8F6A;
… more cookies …

All these cookies were sent together in a single Cookie header. When the Cookie header value became too large, the server started rejecting our requests with 400 Bad Request and the reason: “Your browser sent a request that this server could not understand. Size of a request header field exceeds server limit.”
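The error text above matches what Apache httpd returns when a request header line exceeds its LimitRequestFieldSize setting, which defaults to 8190 bytes. Assuming an Apache-style server with that default, the check can be sketched in Python as follows. `check_request_headers` is a hypothetical helper, and real servers differ in exactly which bytes they count.

```python
# Assumption: an Apache-style limit applied to each request header line.
# 8190 bytes is the default of Apache httpd's LimitRequestFieldSize directive.
DEFAULT_FIELD_LIMIT = 8190


def check_request_headers(headers: dict[str, str], limit: int = DEFAULT_FIELD_LIMIT) -> int:
    """Return the status code a limit-enforcing server would answer with.

    Simplification: each "Name: value" line is measured in bytes and compared
    against the limit; every other check a real server performs is ignored.
    """
    for name, value in headers.items():
        if len(f"{name}: {value}".encode()) > limit:
            # Apache adds: "Size of a request header field exceeds server limit."
            return 400
    return 200


small = {"Host": "example.com", "Cookie": "session=abc123"}
# 100 hypothetical cookies with 128-character values, like the crawler's jar.
large = {"Host": "example.com", "Cookie": "; ".join(f"c{i}={'A' * 128}" for i in range(100))}
print(check_request_headers(small))  # 200
print(check_request_headers(large))  # 400
```

Because the limit is checked per request, once the cookie jar crosses it every following request to the site fails in the same way.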

At this point, the crawler could no longer visit any page on this site because every request was denied. This got me thinking.

What if an attacker does this to cause a denial of service (DoS) condition? Would it work?

I remembered that Google’s Blogspot allows JavaScript code in blog pages. So I created a proof-of-concept (POC) page that creates a set of large cookies via JavaScript. Here is how it works:

- The victim visits a page from a blogspot blog (here is a proof of concept page). This page sets 100 large cookies of 3000 bytes each. The cookies are scoped to path / and to the main domain (blogspot.ro in this case), and they are set to never expire.

- When the victim visits the same page again, the browser concatenates all these cookies into a single, very long Cookie: header. The server rejects the request because the header line is too big (413 Request Entity Too Large); other web servers return 400 Bad Request.

- Since the cookies were scoped to the main domain, the victim will not be able to visit the initial blog until he deletes his cookies.

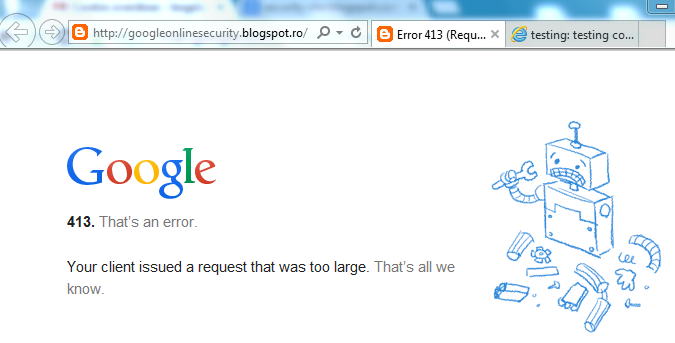

- In fact, he will not be able to visit ANY blog on blogspot, including Google Security’s blog.

Basically, it’s a denial of service by cookies. I tested this on blogspot with Internet Explorer. Chrome is not affected on this particular domain (blogspot.ro): there, Chrome sends three requests, the last one without cookies. It is, however, affected on other domains.
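A quick calculation shows why one visit to the POC page is enough. The Python sketch below rebuilds the Cookie header a browser would send for 100 cookies with 3000-byte values and compares its size with Apache's default 8190-byte limit per header line. The cookie names (`c0` to `c99`) are hypothetical, since the POC's real names aren't given.

```python
# Figures from the proof of concept: 100 cookies, each with a 3000-byte value.
# Cookie names are hypothetical ("c0" to "c99"); the real POC may use others.
NUM_COOKIES = 100
VALUE_SIZE = 3000
APACHE_DEFAULT_LIMIT = 8190  # Apache httpd LimitRequestFieldSize default, in bytes


def cookie_header_size(num_cookies: int, value_size: int) -> int:
    # Length of the "Cookie: name=value; name=value; ..." line a browser would send.
    pairs = [f"c{i}={'x' * value_size}" for i in range(num_cookies)]
    return len("Cookie: " + "; ".join(pairs))


size = cookie_header_size(NUM_COOKIES, VALUE_SIZE)
print(f"Cookie header: {size} bytes, {size / APACHE_DEFAULT_LIMIT:.0f}x the default Apache limit")
# Cookie header: 300596 bytes, 37x the default Apache limit
```

Browsers cap how many cookies they keep per domain (RFC 6265 only asks for at least 50), so fewer cookies may actually be stored. Even 50 such cookies would still produce a header of about 150 KB, far above common server limits.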

I’ve used blogspot as an example, but any domain where an attacker is able to set cookies has the same problem.
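The reason one page can lock the victim out of every blog is cookie domain matching: a cookie whose Domain attribute is the parent domain is sent to every host that domain-matches it. The Python sketch below follows the domain-matching rule from RFC 6265 (section 5.1.3), simplified by assuming lowercase ASCII host names. The function name `domain_match` and the example host names are hypothetical, and browsers apply further checks (such as rejecting public-suffix domains) that the sketch does not model.

```python
import ipaddress


def domain_match(host: str, cookie_domain: str) -> bool:
    """Simplified RFC 6265 section 5.1.3 domain matching.

    Assumes both arguments are already lowercase ASCII and that any leading
    "." in the cookie's Domain attribute has been stripped.
    """
    if host == cookie_domain:
        return True  # identical strings always match
    try:
        ipaddress.ip_address(host)
        return False  # IP addresses only match exactly
    except ValueError:
        pass  # not an IP address, so it is a host name
    # The cookie domain must be a suffix of the host, preceded by a ".".
    return host.endswith("." + cookie_domain)


# Hypothetical host names; a cookie scoped to blogspot.ro reaches all of the first three.
for host in ("someblog.blogspot.ro", "otherblog.blogspot.ro", "blogspot.ro", "notblogspot.ro"):
    print(host, domain_match(host, "blogspot.ro"))
```

The first three hosts print `True` and `notblogspot.ro` prints `False`: the cookies reach every blog under the shared domain but not unrelated look-alike domains.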

The blogspot issue was reported to Google, but they don’t think it can be fixed at the application level, and I totally agree with them. Their response was:

… I definitely agree that this is not ideal behavior, but realistically this isn’t something that a single Web application provider might be able to solve. If you have a proposal for solving this that you could present to browser vendors, they might be interested…

So, who should fix this problem, and at what level should it be fixed? Can it be fixed at all?

EDIT: It seems that someone has already written about this issue here.
